AI-Powered Phishing Detection and Malware Triage: Building an Explainable, Privacy-Preserving Defense Stack for Enterprises in 2025

Practical guide to building an explainable, privacy-first AI stack for phishing detection and malware triage in enterprise environments (2025).

Intro

The enterprise threat surface has expanded: hybrid work, cloud collaboration, and supply-chain software increase the volume and complexity of phishing and malware. Manual triage can’t keep up. In 2025, defenders need AI that not only flags threats with high fidelity but explains decisions and preserves user privacy. This post is a practical blueprint for engineering such a stack: architecture, model choices, explainability patterns, privacy controls, deployment, and an operational checklist.

Why this matters

- Phishing remains one of the most common initial access vectors for breaches.

- Security teams prioritize explainability: analysts need human-readable reasons to act quickly.

- Privacy and compliance regimes (GDPR, HIPAA, CCPA) require minimizing sensitive data and handling it in an auditable way.

This guide assumes you are building for enterprise scale: high volume, strict SLAs, and integration with SIEM/SOAR.

Architecture overview: layered, modular, auditable

Design principle: separate concerns. Build a pipeline with clear boundaries and audit points.

- Ingestion layer: collect email, URL click telemetry, endpoint alerts, attachment metadata. Do minimal parsing at source.

- Preprocessing & feature extraction: extract stable, normalized features—hashes, counts, embeddings—ideally at the edge or in a constrained enclave to minimize raw data exfiltration.

- Model orchestration: lightweight models (fast heuristics) at ingestion, heavyweight models (multimodal transformers, malware scanners) in a controlled environment.

- Explainability module: compute concise explanations alongside scores; persist explanations to support analyst workflows and audits.

- Privacy & storage: enforce retention policies, pseudonymize identifiers, and log model inputs/outputs with access controls.

- Integration & automation: SIEM, ticketing, SOAR playbooks, and feedback loops for human-in-the-loop labeling.

Component responsibilities

- Ingest: validate signatures, strip unnecessary headers, and apply redaction rules.

- Extract: produce structured features and embeddings rather than full text where possible.

- Score: return score, confidence band, and compact explanation.

- Triage: apply policy engine to route to quarantine, automated remediation, or analyst queue.

- Feedback: capture analyst verdicts to feed model retraining and metrics.

Model design: multi-modal, hierarchical, and efficient

Phishing and malware signals are multi-modal: subject/body text, sender metadata, URLs, attachment characteristics (file type, entropy), and telemetry (process creation). Use a hierarchical approach:

- Fast filter: rules plus a small gradient-boosted model for immediate triage, optimized for latency and throughput.

- Deep scorer: transformer-based text model + binary classifier for attachments (static & dynamic features). Run asynchronously for high-risk items.

- Malware triage: static analysis (PE header, sections), YARA signatures, sandbox output embeddings, and an ensemble model to prioritize samples for manual analysis.
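
One of the attachment characteristics mentioned above, file entropy, is cheap to compute and makes a reasonable static feature for the fast filter. The following is a minimal sketch using only the standard library; the function names and the 7.2 bits-per-byte cut-off are illustrative assumptions, not values from a specific product.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of a byte string in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # frequency of each byte value
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def entropy_bucket(entropy: float, high: float = 7.2) -> str:
    """Map entropy to a coarse label.

    The 7.2 cut-off is an assumption for illustration; calibrate it on your
    own corpus, since compressed archives and media are also high-entropy.
    """
    return "high" if entropy >= high else "normal"


if __name__ == "__main__":
    sample = bytes(range(256)) * 4  # uniformly distributed bytes
    print(entropy_bucket(shannon_entropy(sample)))
```

High entropy alone is weak evidence, because packed or encrypted payloads and ordinary compressed files look alike. It is most useful combined with file type and header features in the ensemble.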

Empirical tips

- Keep embeddings small (128–512 dims) for storage and explainability.

- Use incremental model updates with warm starts to avoid abrupt behavior changes between model versions.

- Cache reputation lookups and model outputs with a short TTL to avoid rescoring identical items.
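
The caching tip can be sketched as a small in-memory cache with expiry. This is a hedged illustration: `TTLCache` and `get_or_compute` are hypothetical names, and a production system would more likely use a shared cache with eviction rather than a per-process dictionary.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        """Return the cached value for key, or call compute() and cache the result."""
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = compute()
        self._store[key] = (now, value)
        return value


if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=300)
    # The lambda stands in for a real reputation lookup.
    verdict = cache.get_or_compute("example.test", lambda: "unknown")
    print(verdict)
```

The sketch never evicts expired entries, so memory grows with the number of distinct keys. Keying on a URL or attachment hash rather than raw content also keeps the cache consistent with the data-minimization goals described later.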

Explainability: actionable, not academic

Analysts need brief, causal-seeming reasons. Full SHAP dumps are noisy; build distilled explanations:

- Feature-level scores: return top 3 feature contributors (e.g., suspicious_tld, sender_domain_mismatch, attachment_entropy).

- Natural-language rationale: template statements assembled from top features (e.g., “High-risk TLD and mismatched SPF/DKIM increased phishing score”).

- Artifact-level evidence: show the specific URL component or header sample that triggered the rule, with redaction where necessary.

- Confidence bands: provide model confidence and a reliability score based on recent drift metrics.
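
The distilled-explanation idea above can be sketched as a small templating step. The feature names come from the examples in this post; the `TEMPLATES` wording and the `distill` and `rationale` helpers are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical label templates keyed by feature name.
TEMPLATES: Dict[str, str] = {
    "suspicious_tld": "link uses a high-risk TLD",
    "sender_domain_mismatch": "sender domain does not match the authenticated domain",
    "attachment_entropy": "attachment has unusually high entropy",
}


def distill(contributions: List[Tuple[str, float]], k: int = 3) -> List[Tuple[str, float]]:
    """Keep the k contributions with the largest absolute value."""
    return sorted(contributions, key=lambda c: abs(c[1]), reverse=True)[:k]


def rationale(contributions: List[Tuple[str, float]], k: int = 3) -> str:
    """Build a one-sentence rationale from the top features that raised the score."""
    raised = [name for name, value in distill(contributions, k) if value > 0]
    if not raised:
        return "No single feature strongly increased the score."
    # Fall back to a readable version of the raw name when no template exists.
    phrases = [TEMPLATES.get(name, name.replace("_", " ")) for name in raised]
    return "Score increased because: " + "; ".join(phrases) + "."


if __name__ == "__main__":
    print(rationale([("suspicious_tld", 0.4), ("body_len", 0.01)]))
```

Only positive contributions are phrased as reasons here. Features that lowered the score could be reported separately if analysts find that useful.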

Explainability techniques

- SHAP or Integrated Gradients for deep models, then map numeric explanations to analyst-friendly labels.

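- Attention visualization when using transformer models for email text: highlight the words that received the most attention weight. Treat this as a hint for the analyst, since attention weights are not guaranteed to reflect true feature importance.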

- Counterfactual suggestions: “If the URL domain were known-good, score would drop by 0.45” to guide remediation.
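
A simple, model-agnostic way to produce counterfactual statements like the one above is to swap one feature to a known-benign value and rescore. The sketch below assumes the model can be wrapped as a function from a feature dictionary to a score; `toy_score` and its weights are invented purely so the example runs.

```python
from typing import Callable, Dict, Mapping

Features = Dict[str, float]


def counterfactual_deltas(
    score_fn: Callable[[Features], float],
    features: Features,
    benign_values: Mapping[str, float],
) -> Dict[str, float]:
    """For each feature with a known benign value, report how much the score
    changes when only that feature is swapped to its benign value."""
    base = score_fn(features)
    deltas: Dict[str, float] = {}
    for name, benign in benign_values.items():
        if name not in features or features[name] == benign:
            continue  # nothing to change for this feature
        changed = dict(features, **{name: benign})
        deltas[name] = score_fn(changed) - base
    return deltas


def toy_score(f: Features) -> float:
    """Stand-in linear scorer with made-up weights, for demonstration only."""
    return min(1.0, 0.1 + 0.45 * f["unknown_domain"] + 0.3 * f["has_suspicious_tld"])


if __name__ == "__main__":
    item = {"unknown_domain": 1.0, "has_suspicious_tld": 1.0}
    for name, delta in counterfactual_deltas(toy_score, item, {"unknown_domain": 0.0}).items():
        print(f"If {name} were benign, score would change by {delta:+.2f}")
```

Single-feature swaps ignore interactions between features, so the deltas are a guide for remediation, not an exact decomposition of the score.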

Trade-offs

- Compute: exact SHAP is expensive. Use approximations or precompute explanations for items exceeding a risk threshold.

- Clarity: prefer concise rationales over exhaustive feature lists.

Privacy-preserving controls

Enterprises require data minimization and auditability.

- Edge preprocessing: compute features (e.g., URL parse, sender reputation hash) at mail gateways or endpoint agents and transmit only features and embeddings.

- Pseudonymization: replace user identifiers with stable, reversible tokens stored in a separate vault with strict RBAC.

- Differential privacy and federated learning (FL): for cross-customer model improvements, use federated learning with secure aggregation, or add calibrated noise to model updates before they leave client boundaries.

- Secure execution: run heavy, sensitive models in confidential compute (trusted enclaves) to avoid exposing raw attachments.

- Fine-grained retention: keep raw artifacts only while under active investigation; otherwise keep only features and hashed references.
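
The pseudonymization control above can be sketched with a keyed hash for stable tokens and a separate mapping for controlled re-identification. This is an assumption-laden illustration: `PseudonymVault` is a hypothetical in-memory stand-in, and the boolean authorization flag replaces what would be a real RBAC and approval workflow.

```python
import hashlib
import hmac
from typing import Dict, Optional


class PseudonymVault:
    """In-memory stand-in for a token vault; a real deployment would use a
    separately hosted store behind RBAC."""

    def __init__(self, secret_key: bytes):
        self._key = secret_key
        self._reverse: Dict[str, str] = {}  # token -> canonical identifier

    def tokenize(self, user_id: str) -> str:
        """Return a stable token for the identifier and remember the mapping."""
        # Normalize so the same mailbox always maps to the same token.
        canonical = user_id.strip().lower()
        digest = hmac.new(self._key, canonical.encode("utf-8"), hashlib.sha256).hexdigest()
        token = "u_" + digest[:32]
        self._reverse[token] = canonical
        return token

    def resolve(self, token: str, caller_is_authorized: bool) -> Optional[str]:
        """Re-identify a token; authorization is reduced to a flag for illustration."""
        if not caller_is_authorized:
            raise PermissionError("re-identification requires approval")
        return self._reverse.get(token)


if __name__ == "__main__":
    vault = PseudonymVault(secret_key=b"demo-key-only")
    print(vault.tokenize("Alice@Example.com"))
```

Using HMAC rather than a plain hash means tokens cannot be recomputed by anyone who lacks the key, which matters because email addresses are easy to guess and hash.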

Audit and compliance

- Immutable audit logs of model inputs, outputs, and explanations with tamper-evident storage.

- Data access workflows: analysts request full content with justifications and approvals logged.
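
One common way to make an audit log tamper-evident is a hash chain, where each entry commits to the one before it. The sketch below is a minimal standard-library illustration; the entry layout and function names are assumptions, not a reference to any particular logging product.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    # Canonical JSON so the same payload always hashes to the same value.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    """Append a payload, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev_hash, "hash": _digest(prev_hash, payload)})


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log: List[Dict[str, Any]] = []
    append_entry(log, {"item": "msg-1", "score": 0.97, "reasons": ["suspicious_tld"]})
    print(verify_chain(log))
```

A chain on its own detects edits and reordering but not truncation of the most recent entries. Periodically anchoring the latest hash in a separate system closes that gap.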

Practical pipeline — code sketch

Below is a compact, practical Python-style sketch showing feature extraction and scoring. It’s intentionally minimal and avoids sending raw content upstream.

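    # Sketch only: tokenize, check_tld, embed_text, and vectorize are placeholders
    # for your own helpers. model is assumed to follow the scikit-learn
    # predict_proba convention; explainer.shallow_explain is a hypothetical API.

    def extract_features(subject, body, headers, urls):
        """Build a compact feature list and a local embedding; raw text stays local."""
        tokens = tokenize(subject + " " + body)
        url_count = len(urls)
        has_suspicious_tld = any(check_tld(u) for u in urls)
        body_len = len(body)
        embedding = embed_text(tokens)  # vector from local encoder

        features = [
            ("url_count", url_count),
            ("has_suspicious_tld", int(has_suspicious_tld)),
            ("body_len", body_len),
        ]
        return features, embedding


    def score_and_explain(model, explainer, features, embedding):
        """Return the phishing probability and the top-3 feature contributions."""
        X = vectorize(features, embedding)  # expected shape: (1, n_features)
        # predict_proba returns one row per sample; take the positive-class column.
        score = model.predict_proba(X)[0][1]

        # Explainer returns a compact list of (feature, contribution)
        explanation = explainer.shallow_explain(X)

        # Distill to the three largest contributions by absolute value
        top_expl = sorted(explanation, key=lambda e: abs(e[1]), reverse=True)[:3]
        return score, top_expl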

This pattern keeps raw text local as much as possible, transmits a compact embedding and feature list, and returns a distilled explanation for analysts.

Deployment, monitoring, and metrics

Operationalize like any critical service:

- Metrics: precision, recall, false positive rate, time-to-remediate, analyst override rate, and explanation usefulness (feedback).

- Drift detection: monitor feature distributions, embedding drift, and model confidence shifts. Trigger retraining or gating if drift exceeds thresholds.

- Canary & phased rollout: A/B test model updates on small traffic slices; observe analyst impact before wide release.

- Retraining cadence: automate candidate selection from analyst-labeled queues and recent incidents; prefer continuous fine-tuning with snapshot validation sets.
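
For the drift-detection item, one widely used statistic for a single numeric feature is the population stability index (PSI). The sketch below is a plain-Python illustration with equal-width bins over the baseline range; the binning scheme, the `eps` floor, and any alert threshold you attach to it are assumptions to tune for your data.

```python
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float], eps: float) -> List[float]:
    """Fraction of values per bin, floored at eps to avoid log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(1 for e in edges if v > e)  # number of edges below v picks the bin
        counts[idx] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between two samples of one numeric feature."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        edges = [lo]  # degenerate baseline: a single split point
    else:
        width = (hi - lo) / bins
        edges = [lo + width * i for i in range(1, bins)]
    p = _bin_fractions(baseline, edges, eps)
    q = _bin_fractions(current, edges, eps)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    last_month = [i / 100 for i in range(100)]
    this_week = [0.9 + i / 1000 for i in range(100)]
    print(round(population_stability_index(last_month, this_week), 2))
```

A common rule of thumb treats PSI above roughly 0.25 as a significant shift, but that cut-off is a convention, not a guarantee. Pair it with the analyst override rate before gating or retraining a model.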

Adversarial robustness and red-team testing

Attackers probe model boundaries. Harden with:

- Adversarial training: generate common evasions (obfuscated URLs, homoglyphs) and include them in training.

- Input sanitization: normalize Unicode, strip zero-width chars, canonicalize domains.

- Threat hunting: schedule red-team simulations and inject realistic phishing samples into the pipeline.

- Poisoning defenses: validate training labels, use robust estimators, and cap how much any single source can contribute to a model update.
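
The input sanitization step can be sketched with the standard library: NFKC normalization, removal of invisible characters, and conversion of domains to their ASCII (punycode) form. The helper names and the specific list of invisible characters are assumptions; Python's built-in `idna` codec implements the older IDNA 2003 rules, which is adequate for a sketch.

```python
import unicodedata

# Zero-width and invisible formatting characters often used to split keywords.
_INVISIBLE = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff\u00ad"))


def normalize_text(text: str) -> str:
    """NFKC-normalize and drop invisible characters."""
    return unicodedata.normalize("NFKC", text).translate(_INVISIBLE)


def canonicalize_domain(domain: str) -> str:
    """Lowercase, strip the trailing dot, and convert to ASCII (punycode)."""
    cleaned = normalize_text(domain).strip().rstrip(".").lower()
    try:
        return cleaned.encode("idna").decode("ascii")
    except UnicodeError:
        return cleaned  # leave malformed names as-is for a later rule to flag


def has_non_ascii_label(domain: str) -> bool:
    """True when any label is punycode-encoded, a cheap homoglyph hint."""
    return any(label.startswith("xn--") for label in canonicalize_domain(domain).split("."))


if __name__ == "__main__":
    print(normalize_text("pay\u200bpal"))
    print(has_non_ascii_label("p\u0430ypal.com"))  # Cyrillic "a" in place of Latin "a"
```

A punycode label is only a hint, since many legitimate domains are internationalized. Running the same normalization at training and inference time keeps features consistent across both.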

Human-in-the-loop triage workflows

Automation should accelerate analysts, not replace judgment.

- Confidence thresholds: auto-quarantine when score & reliability exceed high thresholds; route medium-risk to analyst queues.

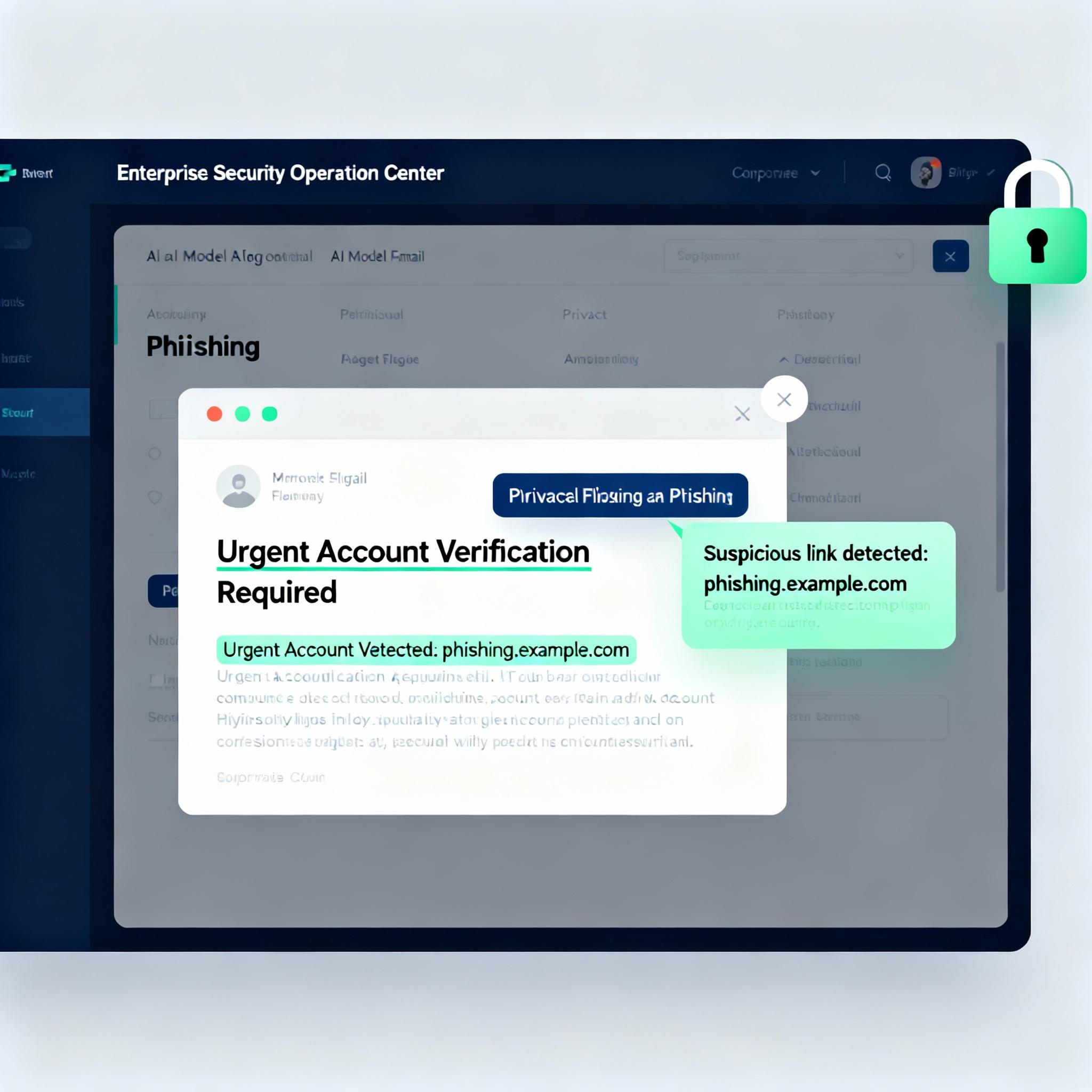

- Explainability-first triage: show top-3 reasons and quick actions (quarantine sender, block URL, escalate to malware lab).

- Feedback capture: record analyst decisions with minimal friction to improve models and reduce false positives.
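
The threshold-based routing described above can be sketched as a small policy function. The `ScoreResult`, `Policy`, and `Route` names and the default thresholds are illustrative assumptions; real values should come from your own precision and recall targets.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Route(Enum):
    AUTO_QUARANTINE = "auto_quarantine"
    ANALYST_QUEUE = "analyst_queue"
    DELIVER = "deliver"


@dataclass(frozen=True)
class ScoreResult:
    score: float        # model probability of phishing, 0..1
    reliability: float  # 0..1, lowered when recent drift metrics look bad
    top_reasons: List[Tuple[str, float]]


@dataclass(frozen=True)
class Policy:
    # Illustrative defaults, not recommendations; tune against your own data.
    quarantine_score: float = 0.95
    quarantine_reliability: float = 0.8
    review_score: float = 0.5


def route(result: ScoreResult, policy: Policy = Policy()) -> Route:
    """Auto-quarantine only when both score and reliability are high."""
    if (result.score >= policy.quarantine_score
            and result.reliability >= policy.quarantine_reliability):
        return Route.AUTO_QUARANTINE
    if result.score >= policy.review_score:
        return Route.ANALYST_QUEUE
    return Route.DELIVER


if __name__ == "__main__":
    verdict = route(ScoreResult(0.97, 0.6, [("suspicious_tld", 0.4)]))
    print(verdict.value)
```

A high score with low reliability falls through to the analyst queue instead of being quarantined automatically, which keeps a human in the loop when the model may be drifting.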

Summary and checklist

Implementation checklist for engineering teams:

- Architecture

  - Separate ingestion, feature extraction, scoring, explainability, and triage services.

  - Ensure immutable logging for audit.

- Models

  - Use hierarchical models: fast filters + deep scorers.

  - Keep embeddings compact and versioned.

- Explainability

  - Return distilled top-3 feature reasons and a templated natural-language rationale.

  - Precompute or approximate explanations for high-risk items.

- Privacy

  - Preprocess at edge; transmit features/embeddings, not raw content, where feasible.

  - Implement pseudonymization, retention policies, and confidential compute for heavy analysis.

- Ops

  - Monitor precision/recall, drift, and analyst override rates.

  - Canary new models, and automate retraining with labeled feedback.

- Robustness

  - Normalize inputs, adversarially train, and conduct red-team exercises.

Final note

In 2025, the best defense mixes AI speed with human judgment, all while respecting privacy. Build modular pipelines that produce concise, actionable explanations. Prioritize auditability and data minimization so your detection stack scales without compromising compliance or analyst trust.