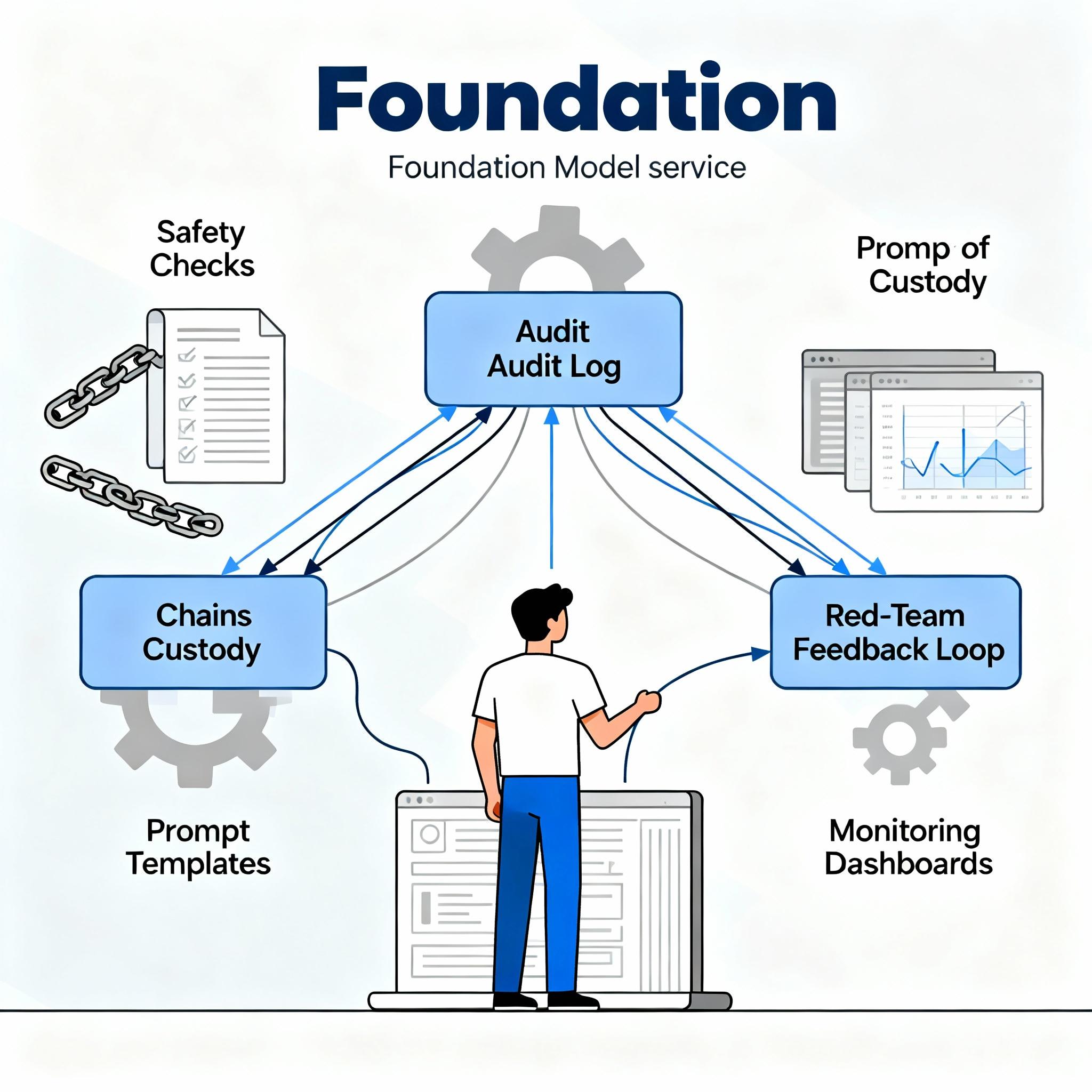

Guardrails in practice: A developer’s playbook for secure, auditable foundation model deployments (data provenance, prompt safety, and red-teaming)

Practical guide for developers to build guardrails around foundation models: data provenance, prompt safety, and automated red-teaming for secure, auditable deployments.

Guardrails in practice: A developer’s playbook for secure, auditable foundation model deployments (data provenance, prompt safety, and red-teaming)

Deploying foundation models into production without guardrails is asking for incidents and compliance headaches. This post is a developer-focused playbook: specific controls you can implement today to make model behavior auditable, inputs safe, and systems resilient to adversarial probes. No fluff — concrete patterns, an end-to-end example, and a checklist you can apply to your API or service.

What this guide covers

- A compact threat model and goals for guardrails

- Provenance and lineage: capture what matters for audits and incident investigations

- Prompt safety and input validation: runtime checks and sanitizers

- Automated red-teaming and monitoring: continuous adversarial testing and telemetry

- End-to-end example with code for an audit logging middleware

Audience: engineers running model inference behind APIs, MLOps/DevOps, and security engineers advising AI services.

Threat model and objectives

You’re protecting against three practical risks:

- Undesired outputs: hallucinations, leaks of sensitive data, or content that violates policy.

- Privilege escalation via prompt injection: attackers controlling or influencing prompts to reveal secrets or change behavior.

- Compliance and forensics gaps: inability to trace how a problematic output was generated.

Core objectives for guardrails:

- Make every inference auditable — record the chain of custody for prompts and data.

- Stop malicious or harmful inputs before they reach the model when possible.

- Continuously probe your deployed surface with adversarial tests and use results to harden controls.

Data provenance and lineage — what to capture and why

Provenance is forensic fuel. If a customer reports a bad output, you need to reconstruct the path that created it.

At minimum, capture these fields for every inference request:

request_id— globally unique identifier for the transaction.timestamp— ISO 8601 UTC.user_idor session context — to scope investigations.input_hash— stable hash of the prompt/user data.prompt_template_id— if you use templates, store the template reference and the filled template.modelandmodel_version— exact model identifier.system_instructions— any system or assistant messages fed into the model.tool_calls— if your pipeline invokes tools or retrieval systems, log the tool name, inputs, and outputs.response(or response_hash) — for privacy, you can store the hash and optionally the full output if retention allows.safety_flags— results of runtime checks like toxicity or PII detection.

Why hashes? Storing input_hash and response_hash lets you prove content existed without persisting sensitive text in plain form. But logs should be selectable per compliance needs: some audits require full text retention.

Practical storage: push provenance records into a write-once store (append-only), and index by request_id, user_id, and timestamp. Use object storage for bulky artifacts (retrieval results, embeddings) and store pointers in the log.

Prompt safety and input validation — runtime patterns

Guardrails should run as close to the input source as possible. Implement layered checks:

- Client-side schema validation: enforce types, sizes, and allowed fields before sending requests to the server.

- Server-side sanitization and allowlisting: normalize inputs, remove unexpected control characters, and enforce maximum prompt length.

- Prompt templates with explicit placeholders: avoid concatenating raw user text into system-level instructions.

- Runtime safety filters: run quick classifiers to detect PII, toxicity, or prompt injection patterns and reject or rewrite requests.

Example policies to enforce:

- Reject requests where user-input contains sequences used to close or modify system instructions (common prompt injection patterns).

- Strip or escape markup and shell-like constructs when you use generated outputs in command execution.

- Require user intent confirmation for high-risk actions.

Prompt templating pattern

Use explicit, minimal templates and attach metadata rather than embedding policies in user-controlled data. Example template elements:

system_instructions— immutable, audited guidance for the model.user_message— sanitized user text.context— curated, retrieval-augmented content with provenance pointers.

Treat templates as first-class versioned artifacts. When you update a template, bump prompt_template_id and capture diffs in your provenance logs.

Automated red-teaming and monitoring

Red-teaming should be continuous and automated. Key components:

- Fuzzer pool: a set of adversarial generation routines producing injection attempts, malicious prompts, and edge cases.

- Canary tests: small synthetic queries that should always be blocked or handled safely.

- Drift detection: telemetry that alerts when distributions of inputs or model outputs change meaningfully.

- Feedback loop: failed red-team cases create tickets, trigger template updates, or refine safety classifiers.

Implement a scheduled job that runs your latest model and prompt configuration against the fuzzer pool. Record all results in the same provenance store and tag failing cases with priority.

End-to-end example: audit-logging middleware

Below is a compact example of a server-side middleware pattern that captures provenance, runs a safety check, and forwards the request. It’s pseudocode-like but directly translatable to Python/Node servers.

# Middleware: audit log + safety check

def handle_inference_request(request):

request_id = generate_uuid()

timestamp = now_utc().isoformat()

# Basic schema checks

if 'user_id' not in request:

return error(400, 'missing user_id')

user_id = request['user_id']

raw_input = request.get('input', '')

# Sanitize and normalize

sanitized_input = sanitize_text(raw_input)

# Compute hashes for audit trail

input_hash = sha256(sanitized_input)

# Run fast safety classifiers (PII, toxicity, prompt-injection patterns)

safety_flags = run_fast_safety_checks(sanitized_input)

if safety_flags.get('block'):

log_provenance(request_id, timestamp, user_id, input_hash, model=None, safety_flags=safety_flags)

return error(403, 'input blocked by policy')

# Construct prompt from template (versioned)

prompt_template_id = get_active_template_id()

prompt = fill_template(prompt_template_id, sanitized_input)

# Record the provenance before dispatching to the model

provenance = {

'request_id': request_id,

'timestamp': timestamp,

'user_id': user_id,

'input_hash': input_hash,

'prompt_template_id': prompt_template_id,

'system_instructions_id': get_system_instructions_id(),

'model': get_model_id(),

'safety_flags': safety_flags

}

append_audit_log(provenance)

# Call model

response = call_model(prompt)

response_hash = sha256(response)

# Finalize audit with response info

append_audit_log({'request_id': request_id, 'response_hash': response_hash, 'full_response': maybe_store(response)})

return success(response)

Notes on the example:

sanitize_textshould remove control sequences and normalize whitespace. Stronger sanitizers can strip suspicious tokens.run_fast_safety_checksare intentionally lightweight classifiers that run in milliseconds to avoid latency spikes.append_audit_logwrites to an append-only store; include RBAC so only authorized services can write.maybe_storecontrols retention policies: you might only store a hash unless the request meets criteria for full retention.

Red-teaming pipeline snippet (concept)

Integrate these steps as a scheduled pipeline:

- Generate adversarial inputs from your fuzzer pool.

- Send them through the production stack (or a staging mirror).

- Collect safety flags and outputs into a red-team dataset.

- Prioritize failures by severity and escalate to template or policy owners.

Automate remediation where safe — for example, update the fast safety classifier’s allow/block lists or add a template escape. For high-risk failures, require human review.

Observability and alerting

Monitor these signals:

- Rate of safety-flagged requests per minute.

- Fraction of requests blocked by prompt-injection detectors.

- Drift in output embeddings or classification labels (indicates model or data drift).

- Latency spikes correlated with safety checks.

Add alert thresholds that combine signal and velocity; a sudden spike in blocked requests is higher priority than a steady low rate.

Summary checklist — deployable guardrails

- Implement append-only audit logs with

request_id,timestamp,input_hash,prompt_template_id,model_version, andsafety_flags. - Version and store prompt templates and system instructions; record template IDs in provenance.

- Add server-side sanitization and fast safety classifiers that can block or quarantine requests.

- Use prompt templates with explicit placeholders and minimize direct embedding of user-controlled content into system instructions.

- Build an automated red-team pipeline that runs adversarial inputs against staging or mirrored production and records outcomes to the audit store.

- Retain artifacts according to compliance policies; use hashes when you cannot retain full text.

- Monitor safety flags, drift metrics, and red-team failure rates; wire alerts to on-call and policy owners.

Final notes

Guardrails are not a single tool — they’re a tight feedback loop between runtime controls, provenance, and continuous adversarial testing. Start by capturing clean, immutable provenance: once you can reconstruct incidents reliably, safety and red-team work becomes tractable. Implement quick, fast checks at inference time, and push expensive analysis into asynchronous pipelines that feed back into templates and policies.

Ship small, iterate fast: a simple audit log and a fast PII/toxicity filter will prevent many problems. Then scale to full red-teaming and versioned prompt governance.

Implement this playbook incrementally, and you’ll move from reactive incident response to a proactive, auditable safety posture for foundation model deployments.