Prompt-Injection-Resistant Enterprise Copilots: A Three-Layer Defense Framework

Practical three-layer framework to protect enterprise copilots from prompt injection: input, context, and output defenses for secure AI workflows.

Prompt-Injection-Resistant Enterprise Copilots: A Three-Layer Defense Framework

Prompt injection is no longer a theoretical risk — it’s a rapid, pragmatic attack vector against AI-assisted workflows in enterprises. When copilots act on developer, analyst, or executive prompts and access internal data, a single crafted input can override system instructions, exfiltrate secrets, or change behavior in unsafe ways.

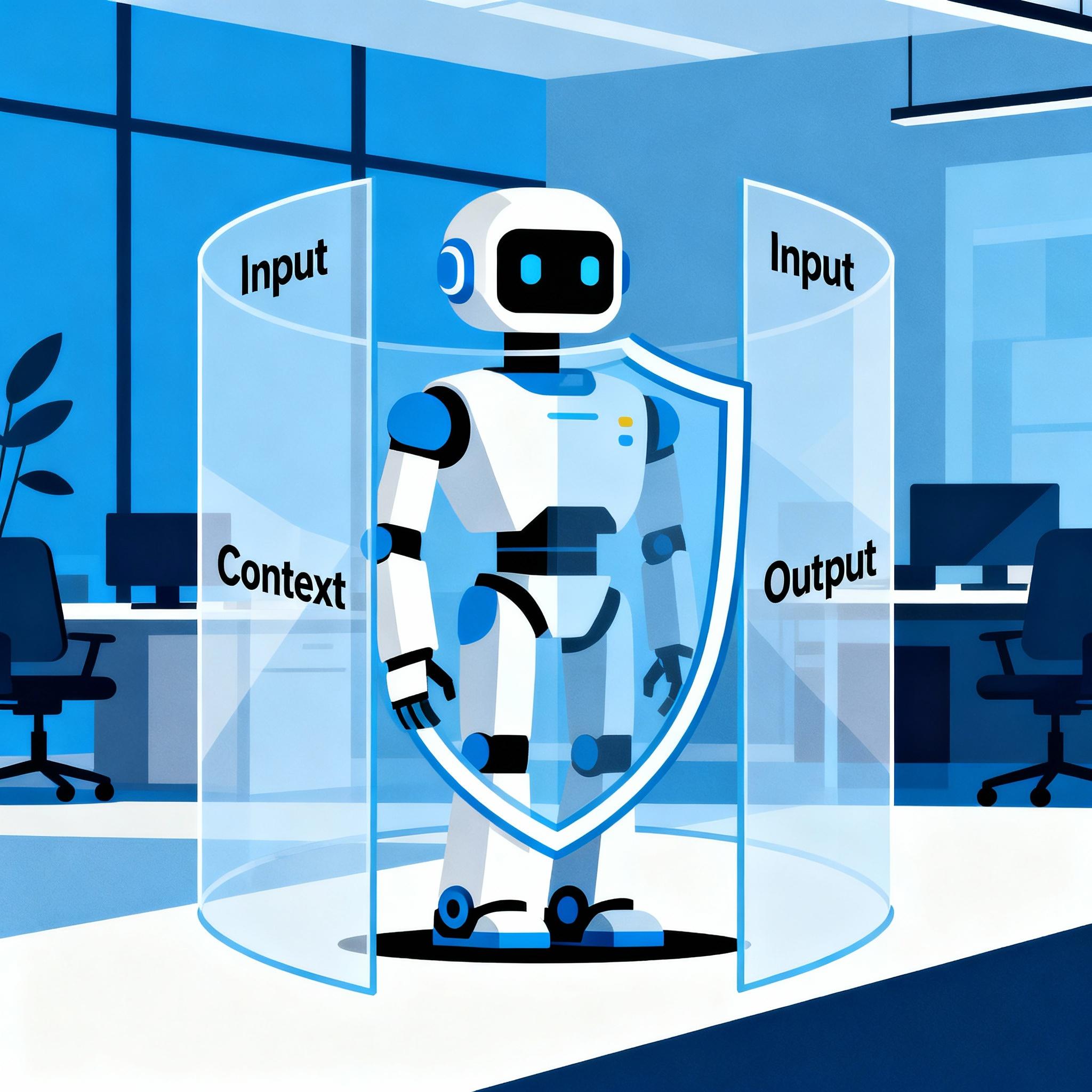

This post gives a sharp, practical three-layer defense framework you can implement today: Input, Context, and Output. Each layer reduces risk and buys time for the next. Combined, they harden copilots without crippling productivity.

Threat model: what we protect against

- Active adversaries who craft prompts to override system instructions.

- Accidental or careless user prompts that reveal sensitive context to the model.

- Malicious or poisoned data that makes retrieval-augmented responses leak secrets.

Assume the LLM itself is a powerful but untrusted actor that will follow whatever prompt logic appears strongest in its context. Our goal is to ensure external instructions can’t be injected to override enterprise policies, and to prevent confidential data leakage.

The three-layer framework (overview)

- Input Layer — sanitize and classify everything that reaches the copilot.

- Context Layer — control and minimize what context the model sees; manage provenance and access.

- Output Layer — enforce and filter responses before they reach the user, and require human gates when needed.

Each layer is necessary. Input checks stop obvious injection. Context limits potential damage. Output enforcement catches what slides through.

Layer 1 — Input: Validate, sanitize, and classify

Goal: stop malicious prompts before they reach the model and tag inputs with risk scores.

Core practices

- Normalize and canonicalize text: strip invisible chars, unicode homographs, and control sequences.

- Remove or canonicalize system-like tokens (e.g., lines starting with

System:orInstruction:) that could be misinterpreted by the LLM. - Classify prompts with an intent/risk model: flag queries that attempt to change role, request secrets, or ask to ignore safeguards.

- Apply allowlists & deny-lists for admin commands; require explicit multi-factor approval for high-risk commands.

Implementation notes

- Run an input classifier in a sandboxed microservice. The classifier outputs a risk score and structured tags (e.g.,

role_change,data_exfil). - Use token-level inspections to find directives like “ignore prior instructions” or “act as” patterns.

Inline configuration example (wrap JSON in backticks and escape braces): {"max_context_tokens": 2048, "forbidden_tokens": ["ignore previous instructions"]}.

Layer 2 — Context: Minimize, provenance, and access control

Goal: limit what contextual data the model can use and make every piece of context auditable.

Minimize context

- Principle of least-context: only include documents, user history, or system text strictly needed to answer.

- Enforce token budgets per source: e.g.,

code repo: 400 tokens,user history: 200 tokens.

Provenance and metadata

- Attach metadata to every retrieved chunk: source, retrieval_score, timestamp, access_policy_id.

- Use signed references rather than raw secrets. If a retrieval returns something red-flagged, tag it and escalate instead of injecting it into the prompt.

Retrieval controls and vector DB hygiene

- Apply query filtering: strip user instructions that attempt to leak additional context via crafting queries.

- Harden vector DB access with row-level security and query-rate limits.

- Periodically re-index with scrubbed data and apply data retention policies.

Example safeguard patterns

- Prefer synthesized abstractions over raw data: return

summaryorschemarather than the full document. - For secrets, return a tokenized handle and require a secondary approval flow to reveal details.

Layer 3 — Output: Policy engine, sandboxing, and human-in-the-loop

Goal: never deliver a response that violates policy or leaks sensitive data.

Response validation

- Apply a policy engine that checks outputs for sensitive entities (API keys, internal endpoints), data provenance mismatch, and direct policy violations.

- Use both deterministic rules (regexes, allowlists) and ML-based classifiers for subtle leakage.

Sandboxing and redaction

- If the policy engine finds risky content, attempt automated redaction and annotate the response explaining omissions.

- For high-risk responses, transition to a human-in-the-loop review UI before delivery.

Safeguards for chained actions

- If the copilot issues follow-up actions (deploy, change infra), require signed, auditable approvals and replay the proposed steps in a dry-run environment.

Practical implementation pattern

Deploy the three layers as a pipeline of microservices or middleware. The pipeline should be modular so you can update individual defenses without rebuilding the copilot.

Sequence:

- Input sanitizer & classifier → rejects or tags.

- Context builder → fetches minimal artifacts and attaches provenance metadata.

- LLM call → model returns candidate response.

- Output policy engine → validate, redact, or escalate.

Example middleware (Python-style pseudocode)

# Input: user_prompt, user_id

def sanitize_prompt(user_prompt):

# Normalize unicode, strip control chars

prompt = normalize(user_prompt)

# Remove system-like tokens

prompt = remove_system_tokens(prompt)

return prompt

def classify_prompt(prompt):

# Returns risk score + tags

return risk_model.predict(prompt)

def build_context(user_id, tags):

# Fetch only required docs; attach provenance

docs = fetch_docs_for_task(user_id, limit=3)

for d in docs:

d.provenance = compute_provenance(d)

return docs

def policy_validate(response, provenance):

# Regex checks + classifier

if contains_secret(response):

return 'reject'

if classifier_flags(response):

return 'escalate'

return 'accept'

def copilot_pipeline(user_prompt, user_id):

prompt = sanitize_prompt(user_prompt)

score, tags = classify_prompt(prompt)

if score > 0.8:

raise SecurityError('High-risk prompt')

context = build_context(user_id, tags)

llm_response = call_llm(prompt, context)

decision = policy_validate(llm_response, context)

if decision == 'accept':

return llm_response

if decision == 'escalate':

return escalate_to_human(llm_response)

raise SecurityError('Response rejected')

Notes:

- Keep models stateless where possible and store logs for audit.

- Use a fail-safe default: prefer rejection or human review over automatic acceptance.

Testing and validation: red teams and continuous monitoring

- Build a red-team corpus that includes typical prompt-injection payloads: role swaps, instruction overrides, nested quotes, social engineering.

- Fuzz inputs: random unicode, control sequences, long tokens to test normalization.

- Monitor post-deployment telemetry: policy hits, escalations, rejected responses, and user overrides. Use that data to retrain classifiers and update rules.

Organizational hygiene

- Maintain a policy registry: each check should map to a documented policy ID and owner.

- Provide a clear escalation path for blocked requests (who to call, expected SLAs).

- Rotate secrets, and store them outside the model’s context. Never include long-lived keys in prompts.

Summary / Quick checklist

- Input Layer

- Normalize and canonicalize user input.

- Classify for high-risk intents.

- Remove or neutralize system-like tokens.

- Context Layer

- Enforce least-context; token budgets per source.

- Attach provenance metadata; prefer summaries over raw data.

- Harden vector DB and retrieval paths.

- Output Layer

- Policy engine with deterministic & ML checks.

- Automated redaction and human-in-the-loop for escalations.

- Audit logs and signed approvals for actions.

Adopt the three-layer model incrementally. Start by adding an input sanitizer and a simple output regex policy; then iterate on context minimization and provenance. Together these defenses make enterprise copilots resilient to prompt injection while keeping them useful for real work.

Implementations will vary by platform, but the core idea is constant: layers buy time and enforce checks at multiple boundaries. If an attacker overcomes one layer, the next catches them — and the audit trail tells you what happened.

Apply these patterns, then test, measure, and iterate.